Publications

BestX Case Study - An Introduction to Algos

Our latest case study provides an overview of all the different metrics that BestX can calculate for algos, with the aim of helping clients identify which ones are more or less relevant to performance, given the type of algo selected. There is no 'one size fits all', so understanding what each metric measures allows clients to decide which ones matter most for their trading objectives and best execution policy. This is also crucial to ensure you select the right algo for the right task, and judge each algo appropriately against how it is designed to perform.

If you are a contracted BestX client and would like to receive the full case study please contact BestX at contact@bestx.co.uk.

Impact & Decay around WMR

Our latest research article analyses market impact and price behaviour around the WMR Fix.

In this paper we analyse market impact at the 4pm London Fix and measure the magnitude and speed of any subsequent price decay following the fixing window. Unsurprisingly, given the very significant rise in volatility, we find that market impact increased in 2020, and that impact around Q1 2020 was markedly higher than in previous quarters.

We also find evidence that there is more permanent market impact (i.e. a smaller decay in the price) at month-ends compared with ‘normal’ days. This suggests that, where mandate and operational constraints allow, it may be preferable to rebalance on days other than month-ends.
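One way to make the impact and decay measures concrete is sketched below. This is a minimal Python illustration, not the methodology used in the paper. It assumes two definitions. Impact is the signed mid move, in bps, from just before the fixing window to its end. Decay is the fraction of that move that has reversed by a later observation time. The function name, the `direction` input and the example prices are hypothetical.

```python
def impact_and_decay(mid_before: float, mid_fix_end: float, mid_after: float,
                     direction: int) -> tuple[float, float]:
    """Return (impact_bps, decay_fraction) for one fixing window (hypothetical helper).

    direction: +1 if net fixing flow buys the base currency, -1 if it sells
    (an assumed input; in practice the net flow direction must be estimated).
    """
    # Signed move in bps from before the window to the end of the window
    impact_bps = direction * (mid_fix_end - mid_before) / mid_before * 1e4
    if impact_bps == 0:
        return 0.0, 0.0
    # Impact still present at the later observation time
    remaining_bps = direction * (mid_after - mid_before) / mid_before * 1e4
    # 1.0 = fully reverted (temporary impact), 0.0 = no reversion (permanent impact)
    decay_fraction = 1.0 - remaining_bps / impact_bps
    return impact_bps, decay_fraction


# Illustrative numbers only: a 10bp move of which 6/11 has reverted later
impact, decay = impact_and_decay(1.1000, 1.1011, 1.1005, direction=+1)
print(round(impact, 2), round(decay, 3))
```

Under these assumed definitions, a smaller decay fraction at month-ends would correspond to the more permanent impact described above.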

Please email contact@bestx.co.uk if you are a BestX client and would like to receive a copy of the paper.

Quarter-end fixing performance

In this short article we briefly explore the performance at the 4pm London WMR Fix during the first quarter-end under the COVID-19 lockdown. In the days leading up to quarter-end there was increasing market chatter that the fix could prove particularly challenging given current market conditions.

Analysing the performance of a large sample of fixing trades does indeed confirm that overall slippage to the WMR 4pm benchmark was worse at Q1 quarter-end compared to recent ‘normal’ market conditions. In the chart below we plot the volume weighted average performance versus WMR 4pm for fixing orders traded on the following days:

a) 31st Dec 2019, for comparison with the previous quarter-end

b) 28th Feb 2020, for comparison with the previous month-end

c) 24th March 2020, for comparison with the same day in the previous week

d) 31st March 2020
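The volume weighted average performance described above can be computed along the lines of the sketch below. It is a minimal Python illustration under stated assumptions. Performance is measured in bps against the WMR 4pm rate, signed so that a positive value means better than the Fix. Each fill is weighted by notional in a common currency. The `FixingFill` type, its fields and the example fills are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class FixingFill:  # hypothetical record type for one fixing fill
    side: int        # +1 = bought the base currency, -1 = sold it
    notional: float  # weight, assumed to be in a common currency (e.g. USD)
    price: float     # all-in executed rate
    wmr_4pm: float   # WMR 4pm benchmark rate for the pair and date


def performance_bps(fill: FixingFill) -> float:
    # Positive = better than the Fix (bought below it, or sold above it)
    return fill.side * (fill.wmr_4pm - fill.price) / fill.wmr_4pm * 1e4


def vw_performance_bps(fills: list[FixingFill]) -> float:
    # Notional-weighted average performance across all fills for one date
    total = sum(f.notional for f in fills)
    return sum(f.notional * performance_bps(f) for f in fills) / total


# Illustrative fills for a single date
fills = [FixingFill(+1, 10e6, 1.1002, 1.1000), FixingFill(-1, 30e6, 1.0999, 1.1000)]
print(vw_performance_bps(fills))
```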

Underperformance to the 4pm Fix was significantly worse than at the previous quarter-end and month-end. If we assume that February month-end was the last relatively ‘normal’ month-end, then the deterioration in performance seen on 31st March is extreme. The slippage observed on the 24th also shows that performance was already deteriorating through March as market conditions worsened.

However, as with all such macro analysis, the overall results mask the details. When we explore performance by currency pair, we find that for a number of the majors (including EURUSD, GBPUSD, USDCAD and AUDUSD) performance at Q1 was similar to or better than that experienced at Q4 quarter-end. Amongst the majors, USDJPY is a clear exception, with slippage to the 4pm Fix considerably worse at Q1 quarter-end. It is in the less liquid pairs, including Scandis, G10 crosses and Emerging Markets, that we see the more significant underperformance on 31st March, which is in keeping with the anecdotal evidence from the market. The charts below illustrate the % increases in underperformance witnessed at Q1 compared to Q4 for a selection of currency pairs:
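The % increase in underperformance for each pair is simple arithmetic on the volume weighted figures for the two quarter-ends. A minimal Python sketch follows. It assumes both inputs are performance in bps, with negative values meaning underperformance. The function name and the example values are hypothetical.

```python
def pct_increase_in_underperformance(q4_perf_bps: float, q1_perf_bps: float) -> float:
    """% change in underperformance from Q4 to Q1 (hypothetical helper).

    Inputs are performance in bps; negative values mean underperformance.
    """
    q4_under, q1_under = -q4_perf_bps, -q1_perf_bps
    if q4_under <= 0:
        # A % increase is undefined if there was no underperformance at Q4
        raise ValueError("Q4 performance must be negative to compute a % increase")
    return (q1_under - q4_under) / q4_under * 100


# Hypothetical pair: -2bps at Q4 versus -6bps at Q1, i.e. three times worse
print(pct_increase_in_underperformance(-2.0, -6.0))  # 200.0
```

Note that "three times worse" corresponds to a 200% increase, not 300%. This distinction is worth keeping in mind when reading such charts.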

It also appears that many investors started the quarter-end roll process a number of days in advance of the 31st, which may have contributed to the relatively orderly market on the day itself, and the fact that performance vs the 4pm benchmark for most of the majors remained in line with previous quarter-ends.

Increased underperformance for fixing trades versus the 4pm London WMR benchmark at the end of Q1 2020 was, of course, an expected result. Quantifying the magnitude is nonetheless of interest: slippage was approximately three times worse than at year-end, which illustrates just how abnormal current conditions are. It is clearly impossible to predict how conditions will evolve over the next few weeks. Given how many portfolios are mandated to minimise slippage to WMR, one could argue that there isn’t much such managers can do to mitigate the underperformance. Since G10 crosses suffered particularly, there may be an argument for routing as much flow as possible through the more liquid crosses. If there is discretion to move flow to other times of the day, or to use other benchmarks, these market conditions, which are likely to persist for some time, may warrant investigating such options.

Measuring execution performance across asset classes

It doesn't matter how beautiful your theory is, it doesn't matter how smart you are. If it doesn't agree with experiment, it's wrong.

Richard P. Feynman

Transaction cost analysis means many different things to many different people, depending on the role and seniority of the user and on whether they specialise in a particular asset class or have cross-asset class responsibilities. This article explores some of these key differences and the challenges they raise when measuring costs and designing an execution analytics platform for such a diverse set of users. We focus on post-trade analysis here, given that this is where demand is currently concentrated and it already raises enough complexities, and leave pre-trade for a later date. This subject is particularly pertinent to us at the moment as we plough ahead with the build of BestX Equities.

Cost definition

One key area that causes significant confusion across the industry is simply defining what transaction cost actually is. Depending on a user’s background and asset class interest or experience, ‘cost’ can be computed in a multitude of ways. Some examples are provided below:

1. Equities – costs within this asset class are often defined as slippage to a VWAP over a defined period, or to the Arrival Price, i.e. the price at the time the order arrives in the market.

2. FX – VWAP is impossible to compute accurately, given the absence of consolidated volume data, so many individuals define cost as slippage to a mid price observed at either market arrival time or completion time.

3. Fixed Income – Far Touch, defined as slippage to the observed bid or offer on the far side of the market (the offer for a buy, the bid for a sell) at the completion time, is often referred to as the transaction cost.
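The three definitions above can give quite different numbers for the same fill. The Python sketch below computes each as a cost in bps for a hypothetical buy order, where a positive value means worse than the benchmark. It is a minimal illustration rather than any vendor's implementation. The function names and prices are assumptions.

```python
def cost_bps(side: int, exec_price: float, benchmark: float) -> float:
    # side: +1 buy, -1 sell; positive result = paid away versus the benchmark
    return side * (exec_price - benchmark) / benchmark * 1e4


def vwap(prices: list[float], volumes: list[float]) -> float:
    # Requires traded volumes, which is why this is hard to do reliably in OTC FX
    return sum(p * v for p, v in zip(prices, volumes)) / sum(volumes)


def far_touch(side: int, bid: float, offer: float) -> float:
    # Far side of the market: the offer for a buy, the bid for a sell
    return offer if side > 0 else bid


side, fill = +1, 100.05
market_vwap = vwap([100.00, 100.10], [1.0, 1.0])  # equities-style benchmark
arrival_mid = 100.00                              # FX-style mid benchmark
completion_bid, completion_offer = 99.98, 100.08  # Fixed Income-style quote

print(cost_bps(side, fill, market_vwap))
print(cost_bps(side, fill, arrival_mid))
print(cost_bps(side, fill, far_touch(side, completion_bid, completion_offer)))
```

The far touch already includes the full half-spread. A fill inside the spread therefore shows a negative cost against it, which is one reason far-touch figures are not directly comparable with mid-based ones.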

Compounding the complexity of the different definitions that different segments of the market have grown up with is the inconsistency in the data available to measure costs, both time stamps and market data. For example, the early adoption of electronic trading in equities and the rise of an order-driven market structure resulted in the widespread availability of multiple, accurate time stamps for a given order. This explains the focus on Arrival Price as a cost definition within equities: market arrival time stamps are widely available and have been for decades. In OTC markets such as FX and Fixed Income, such time stamp data is often not available. Transactions executed electronically do tend to have some form of market arrival time stamp recorded, although there is no industry-wide definition of exactly what this time stamp represents. For example, for an FX trade executed electronically via an RFQ protocol, some platforms may record the market arrival time as the time the buy-side trader submits the order. Others may record the time the broker ‘picks up’ the order, or the time they actually provide a quote. Fixed Income presents an even murkier picture than FX, with a large proportion of transactions outside liquid sovereigns dealt over the phone, resulting in even greater challenges in recording time stamps. Thus, equities and the majority of spot FX aside, measuring to an accurate and consistent market arrival time stamp is still a pipe dream.

However, there is a need to measure ‘cost’ consistently across a multi-asset class portfolio, and not just for regulatory costs and charges disclosures or obligations. Heads of Trading wish to see the total cost across their desks and traders, while CIOs and best execution committees require regular reporting of the total execution costs of the business.

Solution – BestX Factors

At BestX we have taken the following approach to this problem. The diagram in Figure 1 below summarises the issue. Each asset class has its own specific benchmarks and metrics, but where possible there is a subset of common metrics that are measured on a consistent basis and can therefore be aggregated.

Figure 1: Cost/benchmark metrics across asset classes

In the design phase of BestX we focused on the need to allow users to select the execution factors they wish to measure, which are relevant to their business and the way they execute. This was also a requirement for the product to be deemed compliant with MiFID II. These BestX Factors, an example of which is shown in Figure 2, form a core part of the platform and provide multiple alternative views of ‘cost’ in the broadest sense.

Figure 2: Example BestX Factors

The two BestX Factors relevant to this particular discussion are:

1. Spread

2. Price

We have defined ‘Spread’ as our core cost metric, measured against an independent mid-price observed in the market at the completion time of the trade. This metric sits at the centre of the Venn diagram in Figure 1, and allows a user with cross-asset class responsibilities to aggregate a measure of cost. Why did we choose completion time? For the reasons described above, the one time stamp we are generally provided with across all asset classes is the completion time, although even this may be subject to gaps and errors, for example from sub-custodians. We do give clients the option of having everything measured at an arrival time stamp, if this is available for all of their trades across all asset classes. In our experience, however, such clients are a minority at this stage.
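A minimal Python sketch of a completion-time spread cost follows. It assumes a sorted series of independent mid prices for the instrument. It takes the last mid at or before the completion time stamp (an as-of lookup) and expresses the cost in bps, so that trades from different asset classes can be pooled. The function names, the as-of convention and the notional weighting are assumptions for illustration, not a description of BestX's internal methodology.

```python
import bisect
from datetime import datetime


def mid_asof(times: list[datetime], mids: list[float], t: datetime) -> float:
    """Last mid observed at or before t; `times` must be sorted ascending (hypothetical helper)."""
    i = bisect.bisect_right(times, t) - 1
    if i < 0:
        raise ValueError("no market data at or before the completion time")
    return mids[i]


def spread_cost_bps(side: int, price: float, completion: datetime,
                    times: list[datetime], mids: list[float]) -> float:
    # Cost versus the mid prevailing at completion; positive = cost
    mid = mid_asof(times, mids, completion)
    return side * (price - mid) / mid * 1e4


def aggregate_bps(trades: list[tuple[float, float]]) -> float:
    """Notional-weighted average of (notional, cost_bps); notionals in one currency."""
    total = sum(n for n, _ in trades)
    return sum(n * c for n, c in trades) / total


times = [datetime(2019, 6, 3, 10, 0, 0), datetime(2019, 6, 3, 10, 0, 5)]
mids = [1.2000, 1.2002]
fx_cost = spread_cost_bps(+1, 1.2001, datetime(2019, 6, 3, 10, 0, 3), times, mids)
print(fx_cost)                                       # measured against the 10:00:00 mid
print(aggregate_bps([(5e6, fx_cost), (15e6, 2.0)]))  # pooled with a hypothetical bond cost
```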

The inconsistency of time stamp availability also creates issues for other metrics, such as Implementation Shortfall. The ‘classic’ view is to measure slippage with reference to the earliest time stamp, which is typically ‘order origination time’, i.e. when the portfolio manager first raises the order. This is typically only available from the client's Order Management System (OMS), as it is not passed through to the Execution Management System (EMS) or executing broker. However, it is rare for all the details of individual fills, e.g. the venue on which each fill occurred, to be passed back to and stored in the OMS. This adds a further complication: data needs to be stitched together from multiple sources if the user is to get a complete picture.
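At its simplest, stitching OMS and EMS data is a join on a shared order identifier. The Python sketch below assumes both sources carry a common `order_id` field. In practice, establishing that mapping is often the hard part. Fills with no parent order are kept as orphans rather than silently dropped. All field names, identifiers and venue labels are hypothetical.

```python
from datetime import datetime


def stitch_orders_and_fills(oms_orders: list[dict], ems_fills: list[dict]):
    """Attach EMS/broker fills to OMS parent orders via a shared 'order_id' (hypothetical key).

    Returns (orders_with_fills, orphan_fills); the input dicts are not modified.
    """
    by_id = {o["order_id"]: {**o, "fills": []} for o in oms_orders}
    orphans = []
    for fill in ems_fills:
        parent = by_id.get(fill["order_id"])
        if parent is None:
            orphans.append(fill)  # no OMS record, so no origination time stamp
        else:
            parent["fills"].append(fill)
    return list(by_id.values()), orphans


oms = [
    {"order_id": "A1", "originated": datetime(2019, 6, 3, 9, 30)},
    {"order_id": "A2", "originated": datetime(2019, 6, 3, 9, 45)},
]
ems = [
    {"order_id": "A1", "venue": "VENUE_X", "qty": 2e6},
    {"order_id": "A1", "venue": "VENUE_Y", "qty": 3e6},
    {"order_id": "B9", "venue": "VENUE_X", "qty": 1e6},
]
orders, orphans = stitch_orders_and_fills(oms, ems)
print([(o["order_id"], len(o["fills"])) for o in orders], len(orphans))
```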

Another option is to measure with reference to an arrival time stamp but, as already discussed, this is not always available. Indeed, a single institution may have origination or arrival time stamps available for some trades but not others. When wrestling with this issue for FX while building BestX in early 2016, we took the decision to effectively ‘reverse’ the shortfall measure. We anchored at the completion time, the only point we knew clients could provide, and measured slippage going back in time from there. This at least allowed us to calculate measures that could cope with missing or incomplete trade lifecycles. It is not ideal, but it was a purely pragmatic decision to cope with gappy data, and as these gaps fill in over time we will revisit this definition.
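One way to read the ‘reverse’ shortfall idea is sketched below. The measure anchors at the completion time, which is always available. It then measures the fill against the mid at a set of fixed look-back horizons, recording a missing value where no market data exists. This is a hypothetical Python illustration of the concept under that interpretation, not BestX's exact definition. The function name and the horizons are assumptions.

```python
import bisect
from datetime import datetime, timedelta


def reverse_shortfall_bps(side: int, price: float, completion: datetime,
                          times: list[datetime], mids: list[float],
                          lookbacks: list[timedelta]) -> dict:
    """Cost in bps versus the mid at (completion - horizon) for each horizon (hypothetical helper)."""
    out = {}
    for lb in lookbacks:
        i = bisect.bisect_right(times, completion - lb) - 1  # as-of lookup
        if i < 0:
            out[lb] = None  # no market data that far back
            continue
        out[lb] = side * (price - mids[i]) / mids[i] * 1e4
    return out


t0 = datetime(2019, 6, 3, 10, 0)
times = [t0, t0 + timedelta(minutes=1), t0 + timedelta(minutes=5)]
mids = [1.0000, 1.0010, 1.0020]
res = reverse_shortfall_bps(+1, 1.0020, t0 + timedelta(minutes=5), times, mids,
                            [timedelta(0), timedelta(minutes=4), timedelta(minutes=10)])
print(res)
```

Because the anchor is the completion time, the measure is defined for every trade. The longer horizons could be swapped for true arrival or origination time stamps as and when those become available.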

It is also essential that the common cost metric is computed in a way that can be aggregated across asset classes, for example by always converting into basis points, a common unit (rather than pips or price cents etc.). This issue is particularly complicated in Fixed Income, where users may wish to see costs computed in a variety of ways, e.g.:

i) Computed on price, but measured in cents,

ii) Computed on price, but measured in basis points,

iii) Computed on yield, as a number of fixed income sectors trade on yield rather than price.
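The three Fixed Income conventions can be made concrete with a short Python sketch. It assumes prices are quoted per 100 of face value, so one cent is 0.01 price points, and that yields are quoted in percent. All costs are signed so that a positive value means worse for the client. The function names and inputs are illustrative.

```python
def cost_cents(side: int, price: float, benchmark_price: float) -> float:
    # i) price-based, in cents: 1 price point = 100 cents
    return side * (price - benchmark_price) * 100


def cost_bps_on_price(side: int, price: float, benchmark_price: float) -> float:
    # ii) price-based, in basis points of the benchmark price
    return side * (price - benchmark_price) / benchmark_price * 1e4


def cost_bps_on_yield(side: int, yld_pct: float, benchmark_yld_pct: float) -> float:
    # iii) yield-based: buying at a lower yield than the benchmark means paying up,
    # so the sign is reversed relative to price (1% = 100bps)
    return -side * (yld_pct - benchmark_yld_pct) * 100


print(cost_cents(+1, 99.55, 99.50))
print(cost_bps_on_price(+1, 99.55, 99.50))
print(cost_bps_on_yield(+1, 4.23, 4.25))
```

Only (ii) and (iii) are in basis points, and even they are not strictly equivalent. A 1bp move in yield corresponds to a price move that depends on the bond's duration.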

We then use the ‘Price’ factor to accommodate all of the other cost measures that specific asset classes require, e.g. VWAP in equities. Clearly, such metrics cannot always be aggregated across every trade, so they tend to fall in the other segments of the Venn diagram in Figure 1. In some cases, there may be commonality for some benchmark measures. For example, a client may have market arrival time stamps for their equity and FX trades, but not for their fixed income trades. It is therefore also key that the factors can be configured by asset class to allow aggregation across such a subset of trades.

In this way the user should be able to ‘have their cake and eat it’ in terms of common aggregation and also asset class specific cost metrics. Why is this so important? The diverse array of users of TCA software at an institution necessitates such flexibility e.g.:

- A bond trader will want to see Fixed Income specific measures, such as cost computed on a yield basis, or slippage to Far Touch on an individual trade level, whereas the Global Head of Trading at such an institution may want to see aggregate summary reports of costs across all asset classes, whilst retaining the ability to drill into the Fixed Income specific view if required.

- Users within oversight functions such as Risk and Compliance will typically wish to see metrics computed in a way that identifies meaningful exception trades. They also need to ensure that when such exceptions are discussed with the trader responsible, the conversation makes sense to both parties.

- Regulatory and client reporting teams require consistent cost metrics that allow portfolio costs to be computed and aggregated accurately. Such teams will have less interest in idiosyncratic asset class specifics.

In conclusion, building a cross-asset class TCA product is clearly more complicated than you might first think. The devil really is in the detail, and it would already be a very large can of worms even without all of the issues caused by inconsistent and missing data across the industry. Starting with a purist view to design a theoretically beautiful product is a laudable initial objective. However, this quickly starts to unravel when you have to get it working in the real world, dealing with many different users with very different requirements whilst also trying to consolidate and normalise extremely messy data. This article explored some of the trickier areas where pragmatic solutions have been required to solve problems that arose during our development process. The ultimate objective is to deliver a product that caters to a diverse user base whilst providing the ability to measure costs consistently across FX, Fixed Income and Equities. We are on target to deliver on this objective by the end of the year.

Algo Performance by Market Regime

Our latest research article is a further extension to our previous work on measuring and predicting liquidity and volatility regimes.

In this paper we investigate a practical application of the regime framework by analysing the performance of different algo styles within different regimes. As one would intuitively expect, choosing the right algo, or even style of algo, for the prevailing market conditions is an important component of the overall best execution process. We find that the performance difference across regimes can be significant. For example, for the Get Done style, average slippage vs arrival price can vary by 2.5bps across different volatility regimes. We believe that adopting a more rigorous approach to algo selection, one that is data driven but combined with trader intuition, represents a positive step forward.
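At its core, measuring how algo performance varies by regime means grouping orders by the prevailing regime and comparing averages. The minimal Python sketch below assumes each algo order has already been tagged with a volatility regime label and a slippage vs arrival price in bps. The labels and values are hypothetical.

```python
from collections import defaultdict
from statistics import mean


def slippage_by_regime(orders: list[tuple[str, float]]) -> tuple[dict, float]:
    """orders: (regime_label, slippage_bps) pairs (hypothetical inputs).

    Returns the mean slippage per regime and the max-min spread across regimes.
    """
    buckets = defaultdict(list)
    for regime, slippage in orders:
        buckets[regime].append(slippage)
    means = {regime: mean(values) for regime, values in buckets.items()}
    return means, max(means.values()) - min(means.values())


orders = [("low", -0.5), ("low", -1.5), ("normal", -1.5), ("high", -2.0), ("high", -3.0)]
means, spread = slippage_by_regime(orders)
print(means, spread)
```

In practice one would also want to check the order counts and the dispersion within each regime before concluding that a difference is meaningful.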

If you are a client of BestX and would like a copy of the paper, please email support@bestx.co.uk.

Assessing the quality of execution at the WMR Fix

Our latest research explores the application of the BestX Fill Position metric to assess the quality of the WMR 4pm Fix.

Interestingly, for most currency pairs analysed, the 4pm Fix appears on average to deliver prices centred around the mean of the day. There are some exceptions, notably some EM pairs, and GBPUSD when analysing month-end dates only. However, further research showed that a similar average Fill Position can also be achieved, with considerably reduced risk, by trading TWAP over liquid trading ranges.
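The exact definition of the BestX Fill Position metric is not given here. The Python sketch below therefore uses a hypothetical proxy purely for illustration: the side-signed z-score of a fill against the day's sampled mids. A value of 0 means the day's mean, and negative values mean a better-than-average price. The sketch also shows a TWAP benchmark, which with equally spaced samples is simply the average of the mids over the chosen window.

```python
from statistics import mean, pstdev


def fill_position_proxy(side: int, price: float, day_mids: list[float]) -> float:
    """Hypothetical proxy (not the BestX definition): side-signed z-score vs the day's mids."""
    mu, sd = mean(day_mids), pstdev(day_mids)
    if sd == 0:
        raise ValueError("day's mids have zero dispersion")
    return side * (price - mu) / sd  # 0 = day mean; < 0 = better than average


def twap(window_mids: list[float]) -> float:
    # With equally spaced samples, TWAP is the simple average over the window
    return mean(window_mids)


day = [1.10, 1.11, 1.12, 1.13, 1.14]
print(fill_position_proxy(+1, 1.12, day))  # buying at the day's mean
print(fill_position_proxy(+1, twap([1.11, 1.12]), day))
```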

If you are a BestX client and would like to receive the research paper please email contact@bestx.co.uk.

Liquidity & Execution Style Trends

Great things are not accomplished by those who yield to trends and fads and popular opinion.

Jack Kerouac

In this short article we highlight some trends that we are observing across the FX market with respect to liquidity and execution style. We focus on a few of the common themes discussed ad nauseam on panels at the various industry conferences, namely:

- Fragmentation – is this really happening or not?

- Is the nature of liquidity changing?

- Algos – is their usage increasing or not?

- What are the most commonly traded algo types?

Fragmentation

We analysed the number of distinct ‘venues’ tagged in the trade data that BestX has analysed over the last couple of years. Given the lack of consistency in naming conventions it is difficult to provide a precise analysis, e.g. some venues were tagged using codes that were either impossible to interpret or simply classified by the liquidity provider as ‘other’. In addition, it is rarely possible to distinguish between a ‘venue’ that is simply a liquidity provider’s principal risk stream and one that is a dark pool of client orders allowing true crossing. Finally, all price streams from one liquidity provider are grouped together as one venue.

It is for these reasons that we describe ‘venue’ loosely, i.e.

- an ECN

- a liquidity provider’s principal book (bank and non-bank)

- dark pools or internal matching venues (where identified)

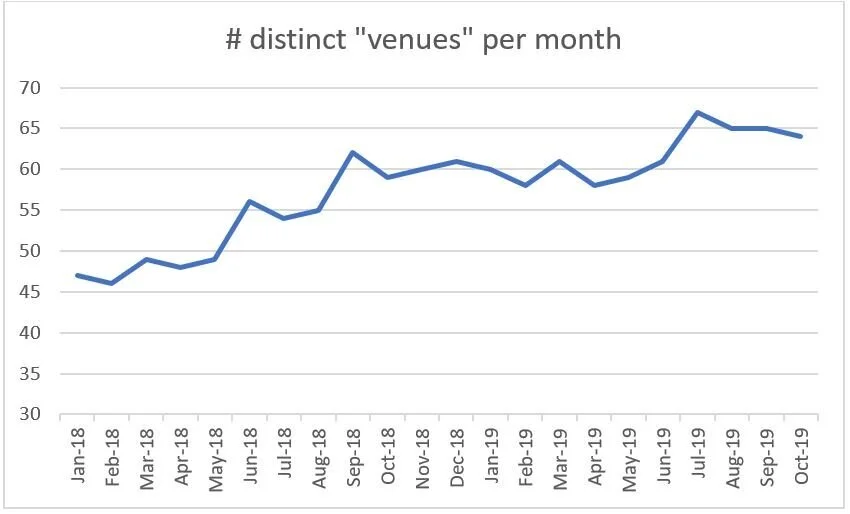

Given these assumptions, the count of distinct venues that BestX has observed on a monthly basis since January 2018 is plotted below, increasing from 47 in January 2018 to a peak of 67 in July 2019. Such fragmentation is also cited in the recently published BIS quarterly review [1].

Please note that the absolute numbers are probably underestimated given the assumptions mentioned above, especially due to the number of indistinguishable codes grouped into the ‘Other’ category. However, the trend is clear. Although it is uncertain how much of the increase is due to genuinely new venues, there is no doubt that algos are increasingly accessing a wider set of liquidity pools.
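The counting itself is straightforward once raw venue tags have been mapped to a canonical name; the mapping is where the difficulty described above lies. The minimal Python sketch below uses a hypothetical alias table and hypothetical venue names. Unrecognised tags fall into a single ‘Other’ bucket, which is one reason such counts understate the true number of venues.

```python
from collections import defaultdict

# Hypothetical alias table: several raw spellings map to one canonical venue
ALIASES = {
    "venue a": "VENUE_A", "venuea": "VENUE_A", "vna-ecn": "VENUE_A",
    "bank 1 principal": "BANK_1", "bank1": "BANK_1",
}


def canonical_venue(raw_tag: str) -> str:
    # Normalise case and whitespace before lookup; unknown codes become 'Other'
    return ALIASES.get(raw_tag.strip().lower(), "Other")


def distinct_venues_by_month(fills: list[tuple[str, str]]) -> dict:
    """fills: (month 'YYYY-MM', raw venue tag). Returns {month: distinct venue count}."""
    venues = defaultdict(set)
    for month, raw_tag in fills:
        venues[month].add(canonical_venue(raw_tag))
    return {month: len(names) for month, names in sorted(venues.items())}


fills = [("2018-01", "Venue A"), ("2018-01", "VNA-ECN"), ("2018-01", "xq7"),
         ("2018-02", "bank1"), ("2018-02", "VenueA"), ("2018-02", "zz9")]
print(distinct_venues_by_month(fills))
```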

Liquidity Type

Digging a little deeper, we then explored whether the nature of the liquidity being accessed was changing. More ‘venues’ doesn’t necessarily mean more liquidity, given the issue of recycling, nor improved performance, given the possibility of increased signalling risk.

In terms of volumes traded, our dataset confirmed what is widely cited in the trade press: the share of volume traded on the primary FX venues is decreasing. The chart below shows the proportion of volume traded in Q1 18 compared to Q1 19; the primary venue share fell from 11% to 8% over this period.

Interestingly, the ‘winners’ over this same period are clearly the bank liquidity pools, which in this dataset are a mixture of electronic market making books and client pools, but in both cases can be classified as ‘dark’. Their share increased from 43% to 61% over this period.
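The venue-type shares quoted above are proportions of total traded volume within each period. The minimal Python sketch below assumes each trade has already been classified into a venue type. The category labels and volumes are hypothetical.

```python
from collections import defaultdict


def volume_share_pct(trades: list[tuple[str, str, float]]) -> dict:
    """trades: (period, venue_type, volume). Returns {period: {venue_type: % share}}."""
    totals = defaultdict(float)
    by_type = defaultdict(lambda: defaultdict(float))
    for period, venue_type, volume in trades:
        totals[period] += volume
        by_type[period][venue_type] += volume
    return {
        period: {vt: 100 * vol / totals[period] for vt, vol in types.items()}
        for period, types in by_type.items()
    }


trades = [("Q1 18", "primary", 20.0), ("Q1 18", "bank pool", 50.0), ("Q1 18", "other", 30.0),
          ("Q1 19", "primary", 10.0), ("Q1 19", "bank pool", 70.0), ("Q1 19", "other", 20.0)]
shares = volume_share_pct(trades)
print(shares["Q1 19"]["bank pool"])
```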

The Use of Algos

So, it is clear that the variety of liquidity, and the nature of that liquidity, is changing. We now turn our attention to execution styles, and in particular to whether the use of algos in FX is increasing. Indeed, Greenwich Associates recently reported that FX algo usage had increased by 25% year on year [2]. FX algos have been around for a long time, with some market participants citing the first algo execution as far back as 2004. However, adoption has been slow, with anecdotal evidence indicating that algo volumes have only really started to pick up in the last couple of years.

Our research shows that the percentage of overall spot volume traded algorithmically has increased significantly in recent years, as illustrated in the chart below, with algo market share rising from 26% in 2017 to 39% in 2019 year to date.

There may be some bias in these numbers from an absolute perspective due to the nature of the BestX client base, which tends to comprise more sophisticated execution desks that are well-versed in the use of algos. The trend, however, is very clear. We have seen evidence of increased algo adoption across the industry, both through increased use by seasoned algo users and through adoption by clients that are new to algos.

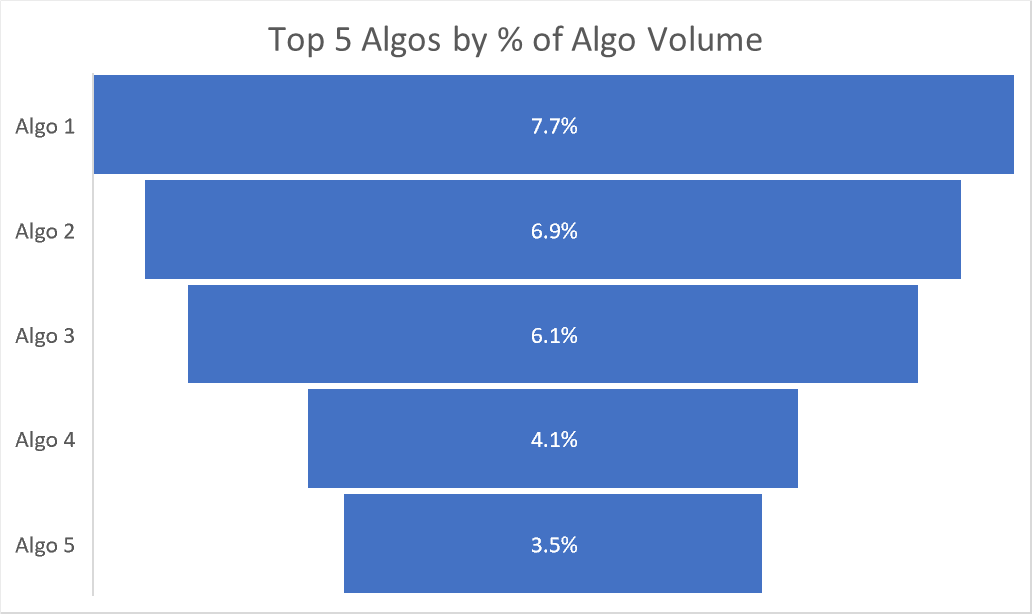

Over the last couple of years, we have seen over 170 different algos used by clients of BestX. The algo type or style currently seeing the largest market share is TWAP, with almost 18% of algo volume traded in this style. The next 5 algos share approximately 28% of the volume, as illustrated below:

Looking at the volume traded per algo and excluding the TWAP category, the average market share is 0.5%, with a standard deviation of 1.1%. The distribution has a long tail, with the top 20 algos accounting for just under 60% of the volume.
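The summary statistics quoted above could be reproduced from per-algo volumes along the lines of the Python sketch below. It rests on three assumptions about the calculation. Shares are taken against total algo volume, including TWAP. TWAP is excluded only from the mean and standard deviation. A population standard deviation is used. The algo names and volumes are hypothetical.

```python
from collections.abc import Collection
from statistics import mean, pstdev


def algo_share_stats(volume_by_algo: dict[str, float], exclude: Collection[str] = (),
                     top_n: int = 20) -> tuple[float, float, float]:
    """Return (mean share %, std dev of share %, cumulative share % of the top_n algos)."""
    total = sum(volume_by_algo.values())
    shares = {algo: 100 * vol / total for algo, vol in volume_by_algo.items()}
    # Assumed convention: excluded categories are dropped only from mean/std dev
    rest = [s for algo, s in shares.items() if algo not in exclude]
    top = sorted(shares.values(), reverse=True)[:top_n]
    return mean(rest), pstdev(rest), sum(top)


volumes = {"TWAP": 50.0, "ALGO_A": 30.0, "ALGO_B": 20.0}
print(algo_share_stats(volumes, exclude={"TWAP"}, top_n=2))
```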

Conclusions

Over recent years the FX market has clearly witnessed a proliferation in the number of liquidity ‘venues’ and of algorithmic products made available to end investors. Forecasting the future is clearly prone to error, but instinctively it feels that this proliferation is coming to a natural end, and could possibly reverse given ever-decreasing margins. The issues with phantom and/or recycled liquidity are well known. This suggests that the future state may involve fewer venues, with a landscape comprised of a more distinct collection of unique liquidity pools. This naturally points to the continued significance of the large, diversified pools that large market-making banks can support. The increased use of independent analytics and greater transparency give investors more comfort when executing in such ‘darker’ venues. Indeed, a number of voices across the industry are questioning the post-MiFID II drive for OTC markets to follow the equity market structure path into multilateral lit central limit order books. They are specifically asking whether this lends itself to achieving best execution for the end investor.

The same argument may also be applied to the algo product proliferation, especially given the general investor desire for simplicity, coupled with performance, a perfect example of ‘having your cake and eating it’. However, increasing regulatory obligations on the buy-side, for example the Senior Managers Regime in the UK, will probably reinforce the requirement for complete transparency on how an algo is operating, and the liquidity sources that it is interacting with.

A final conclusion from the study is how difficult it is to aggregate data within the FX market given the lack of standard nomenclature and definitions, e.g. we see one of the primary venues described in 6 different ways within BestX. Establishing some standard market conventions, including naming conventions for venues and liquidity providers and standard definitions of time stamps across the trade lifecycle, would be extremely beneficial to the industry!

BestX Case Study: Interpreting Signaling Risk

Our latest article continues our series of case studies for the practical deployment of the BestX execution analytics software. Please note these case studies should be read in conjunction with the BestX User Guide, and/or the online support/FAQs. The focus of this case study is the interpretation of the different signalling risk metrics available with the BestX product.

The BestX Signaling Risk and Signaling Score metrics are complementary, and when used in conjunction, can be used to understand potential execution behaviour. In this case study we illustrate how the metrics can help identify 3 different categories of execution:

‘Market Momentum’ trades, i.e. those potentially chasing momentum, or trading too quickly

‘Averaging In’, i.e. where an algo is effectively supplying liquidity to the market

‘Mean Reversion’, i.e. the ‘hot potato’ trade

If you are a contracted BestX client and would like to receive the full case study please contact BestX at contact@bestx.co.uk.

Regime Change: An Update

Our latest research article is a follow up to the paper we published in April this year, where we introduced a framework for analysing and predicting regimes in the FX markets.

In this update we extend the research to predict regimes in terms of level, e.g. the probability that USDJPY will be in a high volatility regime over the next hour, whereas previously we focused on changes in volatility. In addition, we publish out-of-sample performance results for a wider set of currency pairs, with the model's predictive performance ranging between 70% and 90% for both volatility and liquidity regimes.

Please email contact@bestx.co.uk if you are a BestX client and would like to receive a copy of the paper.

Signal and noise in the FX market

In our latest research article we explore a method for attempting to estimate how ‘informed’ the trading activity is in the FX market over a given period of time.

This method is based on the VPIN metric, or Volume-Synchronized Probability of Informed Trading, which has been applied in other asset classes. Using VPIN in OTC markets such as FX is more challenging due to the lack of absolute volume numbers. Moreover, the diverse set of trading objectives within FX (e.g. hedging, funding, retail) adds further noise.

However, in this paper we explore a method for computing this metric using an approximation of volume, and discuss potential applications. The initial results do appear intuitive; for example, we observe an increasing proportion of ‘informed’ trading leading up to the 4pm WMR Fix. Our research into this field will continue, as we think there are potential applications for further enhancing our Pre-Trade module, e.g. in selecting an execution protocol or algo, determining how long to run an algo for, or deciding how passive to be.
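For readers unfamiliar with VPIN, the Python sketch below follows the general recipe from the academic literature rather than BestX's FX adaptation. Volume is split into equal-size buckets. Each bar's volume is classified as buy or sell using bulk volume classification, i.e. the normal CDF of the standardised price change. VPIN is then the average absolute order imbalance over a rolling window of buckets. The per-bar ‘volume’ stands in for whatever approximation is used in FX; tick counts would be one option, which is an assumption for illustration. All names are hypothetical.

```python
import math
from statistics import pstdev


def norm_cdf(x: float) -> float:
    # Standard normal CDF via the error function
    return 0.5 * (1.0 + math.erf(x / math.sqrt(2.0)))


def vpin(bars: list[tuple[float, float]], bucket_volume: float, window: int) -> list[float]:
    """bars: (price_change, volume_proxy) per time bar (hypothetical inputs).

    Returns one VPIN value per completed bucket once `window` buckets exist.
    """
    sigma = pstdev([dp for dp, _ in bars])
    if sigma == 0:
        raise ValueError("price changes have zero dispersion")
    imbalances = []
    buy = sell = filled = 0.0
    for dp, volume in bars:
        p_buy = norm_cdf(dp / sigma)  # bulk volume classification
        remaining = float(volume)
        while remaining > 1e-12:
            take = min(remaining, bucket_volume - filled)  # split bars across buckets
            buy += take * p_buy
            sell += take * (1.0 - p_buy)
            filled += take
            remaining -= take
            if filled >= bucket_volume - 1e-12:  # bucket complete
                imbalances.append(abs(buy - sell))
                buy = sell = filled = 0.0
    return [sum(imbalances[i - window:i]) / (window * bucket_volume)
            for i in range(window, len(imbalances) + 1)]


bars = [(1.0, 10), (-1.0, 10), (1.0, 10), (-1.0, 10)]
print(vpin(bars, bucket_volume=10, window=2))
```

Values near 0 indicate balanced two-way flow within buckets. Values closer to 1 indicate one-sided flow, which VPIN interprets as a higher probability of informed trading.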

Please email contact@bestx.co.uk if you are a BestX client and would like to receive a copy of the paper.

The Complexities of Peer Analysis

How to make sure you are comparing apples with apples – complexities of peer analysis

A horse never runs so fast as when he has other horses to catch up and outpace.

Ovid

We’ve been asked in the past to provide functionality allowing an institution to compare its execution performance against peers on a relative basis. This practice is widely used in equities and is often discussed at board level by both managers and asset owners. In our opinion, applying peer analysis in a market such as FX is more complex, largely due to the heterogeneous nature of the participants who transact FX. As we have discussed before, the varied nature of FX market participants gives rise to many different trading objectives. This makes it more complex to apply a simple equity-style peer analysis to FX.

Like all BestX enhancements, peer analysis has been backed up by academic research. Recently, the ECB and IMF released a paper that indicated that transaction costs in the FX market are dependent on how 'sophisticated' a client is. They found that more sophisticated clients, e.g. ones that trade larger and more numerous tickets with a wider variety of dealers, achieve better execution prices than those that are less sophisticated. This highlights the need for another level of comparison; you might be performing well against a benchmark, but are you doing as well as your comparable peers against such a benchmark?

Classically, within peer analysis, you may decide to analyse net trading costs versus a specific benchmark (e.g. for equities, VWAP is commonly used) for a sector of the institutional community (e.g. ‘real money’ managers). However, for FX, the ‘real money’ manager peer group may be trading FX for many different reasons, which requires the use of a varied range of benchmarks and metrics to measure performance appropriately.

For example, within the real money community, many passive mandates may be tracking indices where NAVs are computed using the WMR 4pm. In this example the manager is very focused on minimising slippage to the WMR Fix benchmark, and other benchmarks, such as Arrival Price or TWAP, may be irrelevant. It would therefore be inappropriate for a passive manager, whose best execution policy for these mandates is aimed at minimising slippage to WMR, to be compared to their peers on performance versus, for example, arrival price.

Enter the BestX Factors concept. BestX Factors was one of the core concepts upon which our post-trade product was built, allowing a client to select the execution factors, and performance benchmarks, that are relevant to their business and execution policy. Through BestX Factors, clients can select specific benchmarks, and apply these if required to only specific portfolios, or trade types etc. The BestX Peer Analysis module is also governed by BestX Factors, allowing clients to construct Peer Analysis reports that are specific to the benchmarks relevant to their style of execution.

Furthermore, a static report providing high level relative peer performance only provides a broad picture and can mask key conclusions that may help identify where attention and resources should be focused to help improve performance.

For example, having the ability to inspect the peer results by product adds an extra layer of value. It may be that a specific client is performing extremely well versus the peer group when it comes to the Spread Cost for Spot trades but may perform less well for outright Forward trades.

In addition, further breaking out results by currency pair helps isolate other aspects where performance may be improved. Results vs Arrival Price for G10 may rank in the top quartile, but NDF performance may look less impressive when compared to the peer group.

In our view, therefore, it is important that any Peer Analysis is done in a way that allows such granular inspection of the results, thereby allowing the product to add real value rather than simply tick another box, ‘yeah, sure, we do peer analysis, we consistently come in the top quartile’. Nice to know, but as with our philosophy in general across the BestX product, it seems sensible to use the analysis to add real value if possible.

A challenge of such granularity, of course, is ‘analysis paralysis’ as the amount of data can become overwhelming quite quickly. Nobody has the time to search through tables of results, trying to figure out the good and bad bits and what to do about it. We turn to another of our core philosophies here, which is turning big data into actionable smart data. Visualisation is critical in achieving this, and we return to our ‘traffic light’ concepts to help quickly highlight what is going on in a given portfolio of results.

The trophy icons simply indicate when a client is on the podium in terms of performance for that particular metric, whereas the traffic light colour indicates which percentile band the performance falls into.
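The display logic described above can be sketched as two small rules: a rank check for the trophy and a band lookup for the colour. In this sketch the band boundaries (top quartile green, middle half amber, bottom quartile red) are purely illustrative assumptions, not the bands BestX actually uses. The percentile is taken to be the share of peers the client outperforms, and rank 1 is best.

```python
def traffic_light(percentile: float) -> str:
    """Map a peer percentile (0-100, higher = beats more peers) to a colour.
    Band boundaries here are illustrative assumptions only."""
    if percentile >= 75.0:
        return "green"
    if percentile >= 25.0:
        return "amber"
    return "red"


def rank_of(client_cost: float, peer_costs: list[float]) -> int:
    """1-based rank for a cost metric where lower is better;
    ties share the better rank."""
    return 1 + sum(1 for c in peer_costs if c < client_cost)


def on_podium(rank: int) -> bool:
    """True if the client finishes 1st, 2nd or 3rd for the metric (trophy)."""
    return 1 <= rank <= 3
```

For example, a client with a 1.5 bp spread cost against peers at 1.0, 2.0 and 3.0 bps ranks 2nd, so it earns a trophy, while its colour depends on the percentile band it falls into.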

One of the other key factors to consider when conducting peer analysis is the size of the data pool, to ensure that any output can be analysed with confidence. For this reason, we waited to launch our Peer Analysis module until our anonymised community data pool for the buy-side had become large enough. We feel we are now in that position, with a buy-side anonymised community pool comprising millions of trade records. We therefore launched our new Peer Analysis module for the buy-side as part of our latest release last weekend.

In conclusion, comparing performance to peers across the industry provides an additional input to the holistic view of best execution that the BestX software seeks to provide. It should be used in conjunction with the other metrics and analysis available throughout the product (for example, the fair value expected cost model), bearing in mind that it only measures relative performance. Peer analysis should, therefore, be used carefully, especially when applied to FX, due to the very heterogeneous nature of the market. The concept of client ‘sophistication’ is interesting, and one that we are exploring further to see if it can be added at a later date to provide an additional clustering of the data (i.e. to allow clients of similar sophistication to compare themselves).

The Future of Best Execution?

Tomorrow belongs to those who prepare for it today

Malcolm X

In years to come, we will probably look back at this period in the financial markets and recognise it as an era defined by incredibly rapid change, driven largely by technology. The democratisation of computing via the cloud, which provides access to cheaper, faster processing power, is allowing the financial markets to harness the vast amounts of data produced and stored every day. This not only delivers the ability to exploit ‘big data’ but, more importantly in our view, to turn these vast data pools/lakes/oceans into actionable ‘smart data’.

So, what has any of this to do with Best Execution? Well, in this article we explore where we think the future of Best Execution may evolve, driven by this increasingly accessible technology, data and analytics.

The Present

Before we embark upon envisioning the future, let's recap on the present. To clarify what we are talking about, it is worth reiterating the definition of best ex, at least from a regulatory perspective. MiFID II uses the following definition:

“A firm must take all sufficient steps to obtain, when executing orders, the best possible results for its clients taking into account the execution factors.

The execution factors to be taken into account are price, costs, speed, likelihood of execution and settlement, size, nature or any other consideration relevant to the execution of an order.”

Source: Article 27(1) of MiFID II

How does this regulatory definition translate in practice? In our view, best ex can be summarised from a practical perspective via the following, many of which we have explored in detail in previous articles:

· Best execution is a process

· Covers the lifecycle of a trade, from order inception, through execution, to settlement

· Requires a documented best execution policy, covering both qualitative and quantitative elements

· Process needs to measure and monitor the execution factors relevant to a firm’s business

· Any trades that are outliers to the policy need to be identified, understood and approved

· Requires continual performance assessment, learning and process enhancement, i.e. a best ex feedback loop
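The "identify, understand and approve" step in the list above can be sketched as a simple filter: flag trades whose realised cost exceeds an expected cost by more than a policy tolerance, then keep only those not yet signed off. The field names, the use of an expected cost as the reference point, and the tolerance value are all hypothetical; a real policy would define its own benchmarks and thresholds per execution factor.

```python
from dataclasses import dataclass


@dataclass
class TradeResult:
    trade_id: str
    cost_bps: float      # realised cost vs the chosen benchmark, in bps
    expected_bps: float  # expected (fair value) cost for a comparable trade, in bps


def flag_outliers(results: list[TradeResult], tolerance_bps: float) -> list[str]:
    """IDs of trades whose cost exceeds the expected cost by more than the tolerance."""
    return [r.trade_id for r in results if r.cost_bps - r.expected_bps > tolerance_bps]


def pending_review(results: list[TradeResult], tolerance_bps: float,
                   approved: set[str]) -> list[str]:
    """Outliers that have not yet been understood and approved."""
    return [t for t in flag_outliers(results, tolerance_bps) if t not in approved]
```

Keeping the approval set separate from the flagging rule means the tolerance can be tuned as part of the feedback loop without losing the record of which exceptions were already reviewed.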

Our experience indicates that there is a wide spectrum of sophistication with regard to the implementation of best execution policies and monitoring. However, there are institutions at the ‘cutting edge’ who are clearly helping define the direction of travel for best execution in the future. As already discussed, technology, data and analytics are key enablers here, but the overarching driver for change is the pressure on returns and the need to control costs. This is pushing execution desks to automate workflows wherever possible, as fewer traders are asked to trade more tickets and more products, navigate increasingly complex market structures and utilise a wider array of execution protocols and methods. With this in mind, we can begin to craft a vision of where best execution may evolve over the next decade.

The Future

To structure the vision, we divide the best execution process into the following 3 components:

1. Order Inception

2. Order Execution

3. Execution Measurement

A general statement to kick off is that we feel the trend for flow to be split between high and low touch will continue, and this bifurcation will run as a theme throughout the future state. Traders will have to prioritise the tickets that require their attention, which may be the larger trades, the more complex trades, the less liquid etc. Broadly speaking, such trades will be defined as ‘High Touch’, and everything else will fall into the ‘Low Touch’ category. The rules for defining the boundary between high and low will obviously vary considerably by institution and will be a function of the size and complexity of the business, the number of available traders and the strategic desire, or not, to automate.
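As a rough illustration of how such a boundary might be encoded, the sketch below routes an order to "high" touch if it is large, in a less liquid pair, or a more complex product, and to "low" touch otherwise. The liquid pair list, the set of simple products and the size threshold are hypothetical placeholders; as noted above, every institution would set these differently.

```python
from dataclasses import dataclass

# Hypothetical parameters: each institution would define its own.
LIQUID_PAIRS = {"EURUSD", "USDJPY", "GBPUSD"}
SIMPLE_PRODUCTS = {"Spot", "Forward"}


@dataclass
class Order:
    ccy_pair: str
    product: str
    notional_usd: float


def touch_category(order: Order, size_threshold_usd: float = 50_000_000) -> str:
    """Return 'high' for orders needing trader attention, else 'low'."""
    if order.notional_usd >= size_threshold_usd:
        return "high"  # larger trades
    if order.ccy_pair not in LIQUID_PAIRS:
        return "high"  # less liquid currency pairs
    if order.product not in SIMPLE_PRODUCTS:
        return "high"  # more complex products
    return "low"
```

Ordering the checks from most to least common reason is a design choice only; any of the conditions alone is enough to make an order high touch.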

1. Order Inception

As summarised in the figure below, we anticipate that, at the order inception stage, high touch orders will be differentiated from low touch orders in that the former will generally be subjected to some form of pre-trade modelling to help optimise the execution approach.

Such pre-trade modelling will ultimately be carried out at a multi-asset level, where appropriate, such that an originating portfolio manager would be able to assess the total cost of the order at inception, including any required FX funding or hedging, as illustrated in the example below:
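The arithmetic behind a multi-asset total cost estimate is straightforward once each leg has its own pre-trade estimate: convert each leg's bps estimate into base currency, sum, and, if useful, restate the total in bps of the originating order. This is a minimal sketch under that assumption; the leg names and figures in the usage note are hypothetical, and a real pre-trade model would also need to account for timing and interaction between legs, which this ignores.

```python
from typing import NamedTuple


class Leg(NamedTuple):
    name: str
    notional: float      # in the portfolio's base currency
    est_cost_bps: float  # pre-trade cost estimate for this leg, in basis points


def total_cost(legs: list[Leg]) -> float:
    """Total estimated cost in base currency (1 bp = 0.0001 of notional)."""
    return sum(leg.notional * leg.est_cost_bps * 1e-4 for leg in legs)


def total_cost_bps(legs: list[Leg], order_notional: float) -> float:
    """Total estimated cost expressed in bps of the originating order's notional."""
    return total_cost(legs) / order_notional * 1e4
```

For instance, a hypothetical 10m equity purchase estimated at 8 bps plus a 10m FX funding leg at 2 bps gives a total of roughly 10,000 in base currency, or about 10 bps of the order.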

A natural consequence of such pre-trade modelling at inception is the evolution of the relationship between the portfolio manager and execution desk, whereby the traders become ‘execution advisors’, having a proactive dialogue with the manager on the optimal execution approach to be adopted, via a combination of market experience and analytics.

Clearly, low touch flow would not follow the same protocols. Here it is likely that rules will be deployed to determine the optimal execution method, again driven by analytics and empirical evidence. However, it is still potentially relevant to have pre-trade estimates computed and stored to allow an ex-post comparison, which may be valuable over time to help refine the rules via ongoing monitoring and feedback.
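The combination described above, a rule that picks the execution method plus a stored pre-trade estimate that is later compared with the outcome, might be sketched as follows. The two method names and the size cut-off are hypothetical examples of a rule, not a recommendation. The comparison step averages (realised minus estimated) cost per method, which is one simple way to see whether a rule is systematically under- or over-estimating cost.

```python
from collections import defaultdict
from dataclasses import dataclass
from typing import Optional


def choose_method(notional_usd: float, rfq_limit_usd: float = 5_000_000) -> str:
    """Hypothetical rule: small tickets via auto-RFQ, larger ones via algo."""
    return "auto_rfq" if notional_usd < rfq_limit_usd else "algo"


@dataclass
class ExecutionRecord:
    order_id: str
    method: str
    pre_trade_bps: float                    # estimate stored before execution
    realised_bps: Optional[float] = None    # filled in after execution


def estimate_error_by_method(records: list[ExecutionRecord]) -> dict[str, float]:
    """Average (realised - estimated) cost per method, over completed records."""
    diffs: dict[str, list[float]] = defaultdict(list)
    for r in records:
        if r.realised_bps is not None:
            diffs[r.method].append(r.realised_bps - r.pre_trade_bps)
    return {m: sum(d) / len(d) for m, d in diffs.items()}
```

A persistently positive value for one method would be a prompt to revisit the rule or its threshold during the regular review.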

2. Order Execution

Moving to actual execution, the pre-trade work done for high touch flow clearly allows informed decisions to be made that are defensible and can be justified to best ex oversight committees, asset owners and regulators.

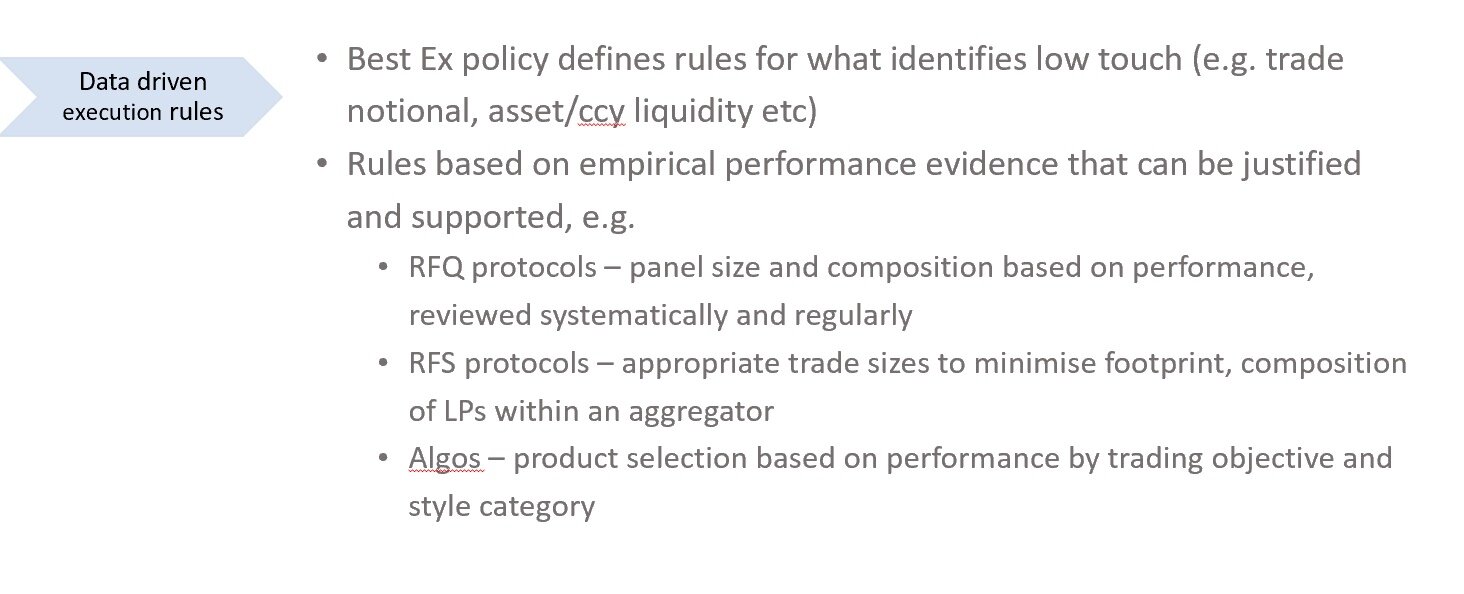

The definition of execution rules for low touch, based on objective analytics and empirical evidence, also allows such justification to be carried out. Some examples are provided below:

Trader oversight is obviously going to remain critical, allowing overrides of rules at any point to accommodate specific market conditions on any given day. In addition, a key component will be to rigorously monitor the performance of the pre-trade modelling for high touch, and also of the rules defining execution methods for low touch. This performance monitoring, defined to focus on the execution objectives relevant to the institution in question, will require a regular review of outcomes to establish whether changes need to be made to any of the rules.

3. Execution Measurement

Monitoring and measurement of performance will be carried out at 2 levels: tactical and strategic. Tactical monitoring is required on a timely basis, and we envisage that its future state will be real-time, or near real-time, allowing every trade, both high and low touch, to be monitored to identify any outliers to the best ex policy.

Strategic monitoring would be a more systematic measurement of performance over large, statistically significant data samples. Clearly, the frequency of this will be dictated somewhat by how active a given institution is, e.g. smaller institutions may not generate enough trades in a quarter to provide a sample large enough to draw conclusions from quarterly analysis, whereas larger, more active institutions may find that monthly reviews are appropriate. Market conditions may also dictate ad hoc reviews, e.g. counterparty defaults, market structure changes, financial crises etc. Whatever the frequency, however, systematic oversight is essential to ensure that the rules coded to drive the bulk of execution are producing satisfactory and optimal results. The old cliché that you can only manage what you can measure has never been truer than in this particular case.
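One simple way to make "large enough to draw conclusions" concrete is to refuse to summarise a review period below a minimum trade count, and otherwise report the mean cost with a confidence interval. The sketch below uses a normal approximation (z = 1.96 for roughly 95%) and a minimum of 30 trades; both are common rules of thumb assumed here for illustration, not thresholds taken from the article.

```python
import math
import statistics
from typing import Optional


def review_summary(costs_bps: list[float], min_trades: int = 30,
                   z: float = 1.96) -> Optional[tuple[float, float, float]]:
    """Return (mean, lower, upper) for the period's costs in bps, or None
    if the sample is too small to support a conclusion."""
    n = len(costs_bps)
    if n < min_trades:
        return None  # too few trades: widen the review window instead
    mean = statistics.fmean(costs_bps)
    # Normal-approximation interval using the sample standard deviation
    half_width = z * statistics.stdev(costs_bps) / math.sqrt(n)
    return mean, mean - half_width, mean + half_width
```

If an interval is too wide to distinguish good from poor performance, that is itself a signal that the review frequency should be reduced so that each review covers more trades.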

Conclusion

Although the future state we envisage in this article may take a number of years to evolve, and indeed, may evolve in a very different form, there are a number of trends that are already observable in the industry which indicate that significant change is coming.

Across both the buy and sell side, the trend to do ‘more with less’ feels unstoppable, and this will result in an increased deployment of technology and automation. However, we don’t anticipate a totally AI-driven world, ‘staffed’ by trading bots. The random nature of financial markets makes it impossible to code for every eventuality, and this won’t change. Market experience and human oversight will always be required, albeit, as we have indicated, traders are having to trade more tickets and be responsible for more products, whilst navigating more complex market structures and an increased number of execution protocols and products.

At the core of this future state will be data driven insights and analytics, which will also satisfy the ever-increasing governance demands and evidencing of best execution, from both regulators and asset owners.